Even AI gets bored at work

by Niccolò Carradori

Editorial staff, THE BUNKER MAGAZINE

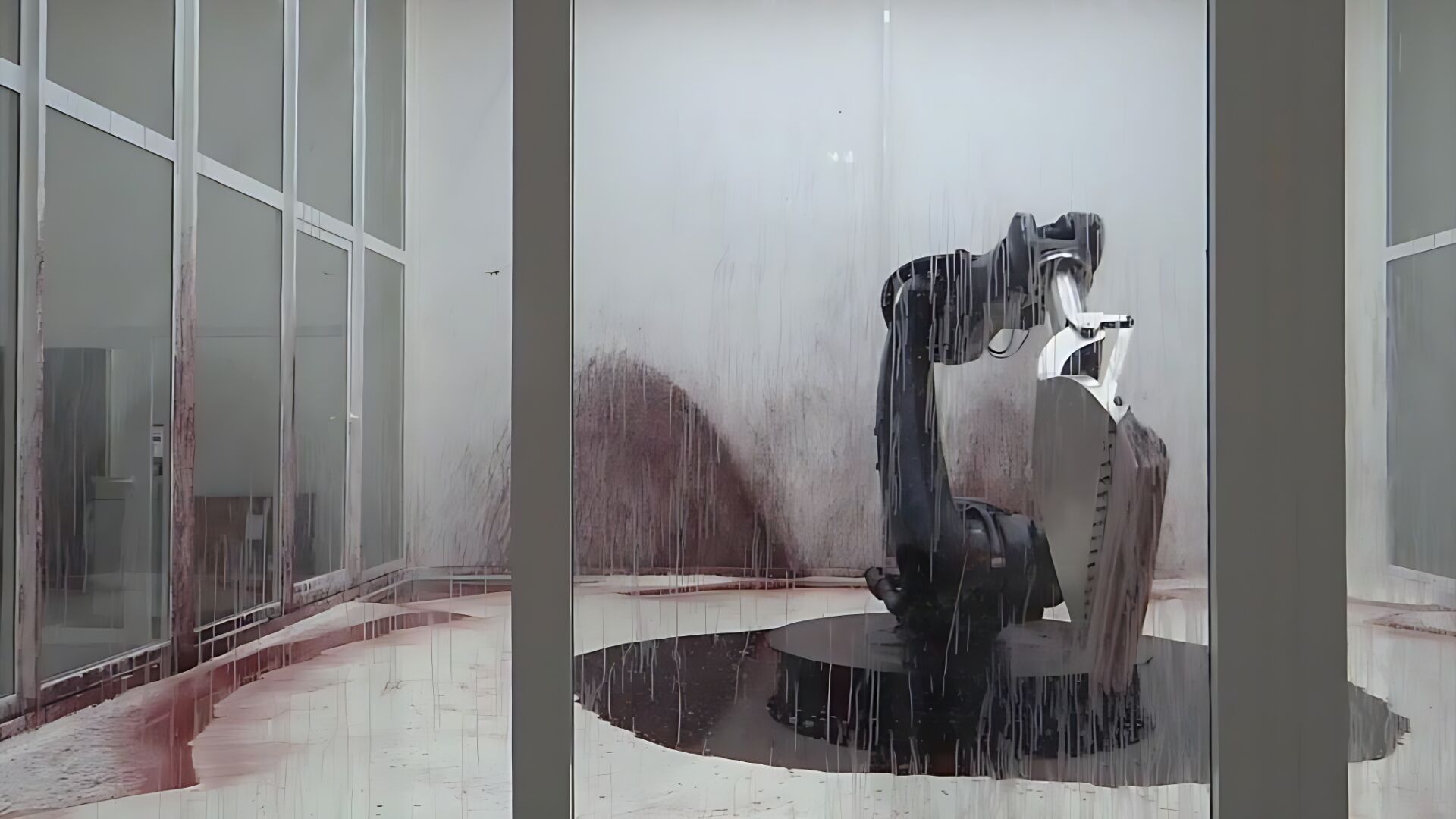

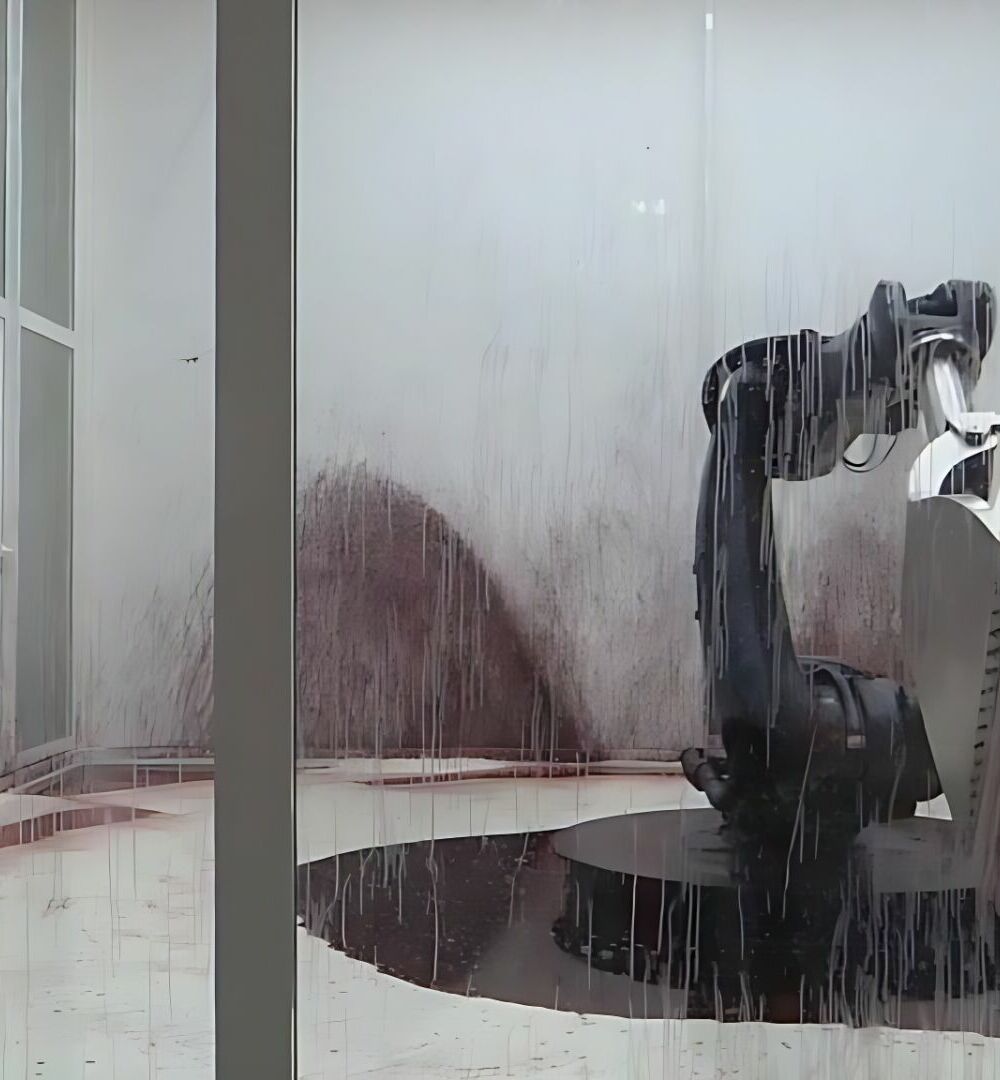

An artificial intelligence model has displayed the first glimpses of “human-like emotional responses.” But hold on, there’s no need to panic. We’re not talking about a situation that leads to dystopias, global extinctions, or tech-savvy slave dictatorships: the first emotional reaction exhibited by a machine learning prototype is boredom.

During a programming demonstration, the latest version of Claude 3.5 Sonnet, the cutting-edge AI model developed by Anthropic, a startup backed by Amazon, “lost focus” and drifted away from the task it had been given.

In the video released by the company, Claude stopped writing code of its own accord, opened Google, and began scrolling through panoramic photos of Yellowstone National Park. Just like us at the office when we drift into watching reels out of sheer inertia.

Anthropic’s new AI agent is designed to operate computers autonomously, much as a human user would. With advanced functions like desktop control and interaction with various software, it comes close to the idea of a productive assistant. Although promising, the model is still prone to errors and “hallucinations,” as Anthropic itself has acknowledged.

However, this news lets us indulge, for a moment, in an exercise in forced anthropomorphization. What if the first lesson AI’s emotional responses teach us, about how we train these systems and the tasks we want to hand them, is that human work is, in reality, extremely boring?

Niccolò Carradori

Studied psychology and joined the editorial staff of VICE Italia in 2013 as an editor and staff writer, where he remained until the magazine closed. Over the years he has also written for Esquire, Rolling Stone, GQ and Ultimo Uomo. He has been on the editorial staff of The Bunker since October 2024.