Adversarial Examples: Hidden threats to neural networks

by Fabio Gnassi

Although the functioning of machine learning algorithms may seem abstract and difficult to decipher, they rely on a series of structured processes that make them vulnerable to specific threats. Understanding the risks these tools are exposed to is essential for developing greater awareness in their use.

The following is an interview with Pau Labarta Bajo, a Mathematician who transitioned to a Machine Learning Engineer and later became an educator in the field of Machine Learning. Driven by his passion for problem-solving, Pau participated in the International Mathematical Olympiad, which reflects his lifelong enthusiasm for tackling complex challenges — a key aspect of machine learning. He began his career over a decade ago as a Quantitative Analyst at Erste Bank and subsequently worked as a Data Scientist at Nordeus, a mobile gaming company that is now part of Take-Two Interactive. It was at Nordeus that he encountered his first real-world machine learning project, a pivotal experience that cemented his interest in the field.

Since then, Pau has freelanced at Toptal, contributing to diverse projects ranging from self-driving cars and financial services to delivery apps, time-series predictions for online retail, and solutions for health insurance providers. Two years ago, Pau started sharing his knowledge with the community on platforms like Twitter/X and LinkedIn. His first course, The Real-World Machine Learning Tutorial, has garnered over 500 satisfied students, and he continues to share valuable free content through his social media channels.

One of the risks faced by machine learning systems is known as “Adversarial Machine Learning.” Could you explain what this involves?

The term AML (Adversarial Machine Learning) refers to a set of techniques and procedures designed to compromise the functioning of a machine learning model. Machine learning models can be applied in various fields, such as developing applications for computer vision or generating text and images. Each of these categories of models can be attacked using processes known as “Adversarial Techniques,” which alter the behaviour of a model by introducing manipulated data, referred to as “Adversarial Examples.”

The way a model is compromised depends on how these tools operate. Neural networks are “differentiable with respect to the input,” meaning even minimal variations in input data can significantly affect the quality of the generated outputs. Inputs are the raw data provided to the model, while outputs are the expected results. The model analyses and processes inputs to identify patterns, which are then associated with a specific output. A model can be attacked at two distinct stages: during the training phase or during the inference phase.

Interestingly, humans can also be deceived in similar ways. For instance, comparing the human brain to a computer vision system, both can fall victim to optical illusions, as seen when observing Escher’s paradoxical drawings.

How do these attacks work?

Firstly, it is important to distinguish between attacks occurring in the digital world and those in the physical world. Digital attacks target both the training and inference phases. The inference phase occurs when a pre-trained model is used to make predictions or decisions (outputs) based on new data (inputs). Corrupting a model during this phase involves providing it with manipulated inputs to alter its functioning, leading to incorrect outputs.

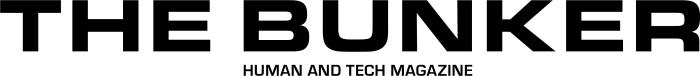

For example, in the case of a model trained for object recognition, such an attack might involve using a modified image. The modification is carried out using algorithms that generate a perturbation applied to the original image. This perturbation subtly alters some pixels, remaining imperceptible to the human eye but detectable by the neural network, which consequently produces an incorrect classification.

Another category of digital attacks involves the contamination of training datasets, known as Poisoning Attacks. This type of attack manipulates the dataset by introducing specific biases or censoring relevant information, negatively affecting the model’s learning process.

What about in the physical world?

Attacks in the physical world exclusively target the inference phase. An interesting example comes from research demonstrating that it is possible to compromise the computer vision systems of autonomous vehicles by applying stickers to road signs, causing the system to misinterpret their meaning. Here too, Adversarial Examples are generated using algorithms that overlay a layer of noise onto the original image. For the human eye, which relies on a semantic perception of objects, the meaning of the sign remains unchanged. However, for a computer vision system analysing the individual pixels of an image, these small modifications can be significant, resulting in classification errors.

Another example of Adversarial Attacks in the real world pertains to Large Language Models (LLMs). Due to the vast amount of data used during their training phase, these models can generate text containing sensitive or inappropriate information. To mitigate this, researchers have implemented post-processing techniques that block the release of certain outputs. However, some prompts can bypass these safeguards to produce the desired outputs.

In these cases, Adversarial Examples take on different meanings depending on whether they are interpreted by a machine or a human. The prompts, generated using symbols and characters that form nonsensical texts for a human reader, can deceive the model and compromise its functioning.

How can models be protected from these attacks?

When discussing the security of machine learning models, it is crucial to consider the inherent asymmetry between the efforts required to defend a model and those needed to attack it. Models developed over months by teams of expert researchers, using GPU clusters and costing millions of dollars, can be compromised within hours. It is, therefore, much easier to attack a model than to defend it.

Researchers designing a model are aware of the risks posed by Adversarial Examples, which is why they have developed several techniques to counter such attacks. One of the most common is Adversarial Training, which involves creating synthetic data using Adversarial Techniques and incorporating them into the training process. For example, if training a computer vision model to recognise images of cats, the dataset would include both authentic and manipulated images of cats. This teaches the model that, despite the corruption, the images still represent the same subject.

Regarding Poisoning Attacks, particularly in the case of Large Language Models (LLMs), it is important to highlight how the presence of fake news or malicious information is closely linked to how these models are trained. For years, training approaches relied on constructing enormous text datasets, often without paying close attention to the content of such data. In other words, vulnerabilities introduced by an Adversarial Attack could directly stem from the dataset creation phase.

In recent years, however, there has been a shift in approach: researchers now aim to develop smaller, more efficient, and secure models built using better-curated datasets. This increased focus on data quality represents a significant step towards reducing the likelihood of compromising a model’s effectiveness.